Describing Video in Natural Language is Now Possible. Meet MXT-1

When my brother Frédéric and I started Moments Lab (formerly Newsbridge) seven years ago, we were driven by a desire to redefine the way media teams in the broadcast and sports world manage, store and index their audiovisual content. Working in these industries ourselves, we constantly encountered the same issue: valuable time was lost simply searching for the right footage to build a story. Rolling through hours of rushes and sharing shortlisted clips was a highly manual process that could easily take up 50% of a production team’s time. It simply wasn’t scalable or sustainable in a world where video usage was increasing so fast. Making content searchable became our ultimate purpose.

One of our earliest tech automations was a news workflow triggered by a QR code which acted as the identifier of the story in the NRCS. An editor would create a story, generate the code, and assign it to a journalist in the field.

This initial concept was only used for a short period of time, but it meant that from the very beginning, Moments Lab’s architecture was capable of analyzing live video. Switching from QR codes to other modes of detection was then not such a giant leap.

In 2017 we started R&D with AI as a means of solving the video searchability problem, always trying to push beyond the state of the art in AI indexing to enable media managers to better log and find their content. We’ve long been believers in the power of multimodal AI, and it has now developed into our new generative AI technology, MXT-1.

But let’s roll back to the start of our AI journey.

Our earliest prototype media indexing solution used AWS and Google off-the-shelf AI services to assess the value it could create with our first users. However, we realized quite quickly that the quality and cost were not aligned with the AI product that we envisioned.

These unimodal AIs produced a large number of false positives. For example, while working on a cognitive indexing project for national public broadcaster France Télévisions, the AI misidentified members of the European Parliament as baseball players. This is not so surprising when you consider that these companies weren’t (and still aren’t) designing their AIs for the news and sports industries. They cater to many use cases, and the key bottlenecks we encountered (quality, and price for use at scale) were barriers to adoption. We had many ideas, and set out to find a solution.

Assessing this media back in 2017, we knew that we could get an accurate match on the faces from publicly available data. A voiceover was explaining what was happening on screen, and we had the video’s metadata. Why couldn’t we merge all of this to at least improve the accuracy of facial recognition? We plugged in Wikidata, soon added transcription, then another modality and another, to eventually develop a multimodal AI.
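To make the idea of merging modalities concrete, here is a minimal sketch of one way it could work. It is an illustration only, not our production pipeline: the function name `fuse_face_candidates`, the boost weights, and the sample names and scores are all hypothetical. It assumes the face matcher returns candidate names with scores, and it raises a candidate's score when the same name also appears in the transcript or the metadata.

```python
def fuse_face_candidates(face_scores, transcript, metadata_text,
                         transcript_boost=0.2, metadata_boost=0.1):
    """Re-rank face-recognition candidates using other modalities.

    face_scores: dict mapping candidate name -> visual match score in [0, 1].
    transcript, metadata_text: plain text from speech-to-text and file metadata.
    The boost values are illustrative assumptions, not tuned parameters.
    """
    transcript_lc = transcript.lower()
    metadata_lc = metadata_text.lower()
    fused = {}
    for name, score in face_scores.items():
        name_lc = name.lower()
        if name_lc in transcript_lc:
            score += transcript_boost  # the voiceover mentions this person
        if name_lc in metadata_lc:
            score += metadata_boost    # the metadata mentions this person
        fused[name] = min(score, 1.0)  # keep scores in [0, 1]
    # Highest fused score first
    return sorted(fused.items(), key=lambda item: item[1], reverse=True)


# Hypothetical example: the visual match alone slightly prefers the wrong person.
candidates = {"Player A": 0.55, "Politician B": 0.50}
ranked = fuse_face_candidates(
    candidates,
    transcript="Politician B addressed the European Parliament today.",
    metadata_text="Plenary session, Strasbourg",
)
best_name = ranked[0][0]
```

In this toy case the transcript mention is enough to move the correct candidate to the top, which is the intuition behind combining faces, speech and metadata rather than trusting any single signal.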

We ultimately decided to completely remove off-the-shelf services, and build our own AI pipelines.

Our customers compelled us to keep pushing the boundaries of what was possible with AI indexing, including automatically logging and transcribing content as it came in on live broadcasts. After all, today’s live is tomorrow’s archive.

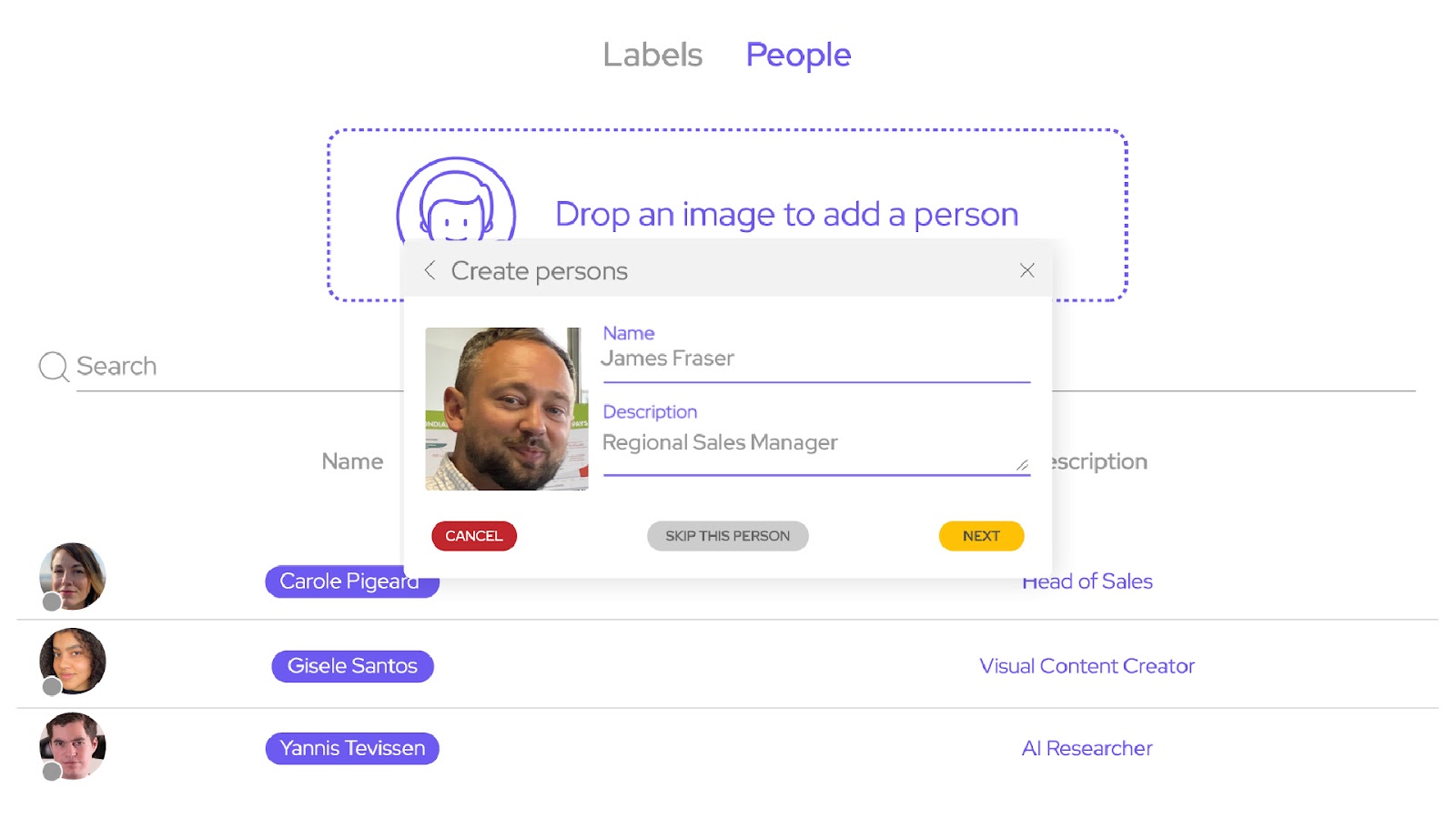

What if our tech could be trained to suit an organization’s specific needs? What if they could detect, label, tag and easily search for the people and places that mattered most to them?

Starting with TV channel M6 in 2018, we introduced thesaurus and taxonomy support along with a semantic search engine that is error- and plural-tolerant. Users could connect and train Moments Lab AI on their own knowledge database, so that it would speak the same language as their media loggers. Navigating the jumble of tags in a media asset manager to land on the exact content you need is not easy without specific training. Semantic search empowers any user to navigate their media collection as quickly and easily as they search the web.
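As a rough illustration of what "error and plural tolerant" means in practice, the sketch below matches a user's query against a small thesaurus. It is a toy built on fuzzy string matching from Python's standard `difflib` module, not a semantic search engine and not our implementation. The helper names `normalize` and `find_tag`, the naive English plural rule, the 0.8 cutoff and the sample thesaurus are all assumptions made for the example.

```python
import difflib


def normalize(term):
    """Lowercase a term and apply a naive plural rule (assumption: English-style endings)."""
    term = term.lower().strip()
    if term.endswith("ies") and len(term) > 4:
        return term[:-3] + "y"   # trophies -> trophy
    if term.endswith("s") and not term.endswith("ss") and len(term) > 3:
        return term[:-1]         # stadiums -> stadium
    return term


def find_tag(query, thesaurus, cutoff=0.8):
    """Return the thesaurus entry closest to the query, or None if nothing is close enough."""
    index = {normalize(tag): tag for tag in thesaurus}
    matches = difflib.get_close_matches(
        normalize(query), list(index), n=1, cutoff=cutoff
    )
    return index[matches[0]] if matches else None


# Hypothetical customer thesaurus
thesaurus = ["stadium", "press conference", "trophy"]

plural_hit = find_tag("stadiums", thesaurus)   # plural form of a known tag
typo_hit = find_tag("stadum", thesaurus)       # misspelled query
no_hit = find_tag("helicopter", thesaurus)     # unrelated query
```

A real system would draw on the customer's own taxonomy and proper linguistic handling, but the principle is the same: the user should not need to type the tag exactly as a media logger entered it.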

In 2021, a challenge set by football club Olympique de Marseille and rugby union side Stade Toulousain spurred our AI R&D team to crack the detection of logos based on pattern, not just OCR (optical character recognition). In other words, our AI doesn’t solely rely on the text accompanying a logo image to be able to identify it. The sports clubs also wanted an ‘open thesaurus’ so that they could train the AI to detect new sponsor logos in the future. We’re proud to say that this exists now too.

More recently, we conquered the limitations of AI landmark detection with the encouragement of Asharq News. Journalists generally rely on manual annotators or off-the-shelf AI landmark detection services to find footage of historic structures, monuments, cityscapes, and landscapes to build a story. Both options are limited and expensive.

With the help of language models, we’ve built a much larger landmark dataset, and can even detect when there’s an aerial shot. Because our MXT-1 AI automatically analyzes media more efficiently, we can be very competitive on cost.

It wasn’t just the price of specific AI detection elements that we needed to challenge. A common barrier to big and meaningful AI indexing is the prohibitive overall cost of the technology. Many organizations that want to embark on full scale live and archive indexing projects just can’t make the business case. This means that a significant amount of content remains hidden in their media collections, along with a potentially sizable new source of revenue.

It’s a problem that we’re pleased to say we’ve solved with MXT-1 through energy efficiency. Our goal was to cut computing costs while improving the AI’s quality. We discovered that by running our new AI on CPUs rather than GPUs wherever possible, we could reduce energy consumption by 90%.

Running a traditional AI stack is equivalent to the output of approximately 30 cyclists riding at a moderate pace on flat terrain for an hour, with each cyclist generating around 100-200 watts of power. With MXT-1, we would need just 4 cyclists.
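For readers who like to see the numbers, the cyclist analogy can be spelled out with some simple arithmetic. This is only a back-of-the-envelope sketch using the figures quoted above; the function name `energy_kwh` is ours, and the result describes the analogy rather than a measured benchmark.

```python
WATTS_PER_CYCLIST = (100, 200)  # low and high end of the range quoted above
HOURS = 1


def energy_kwh(cyclists, watts, hours=HOURS):
    """Energy in kilowatt-hours produced by `cyclists` riders at `watts` each."""
    return cyclists * watts * hours / 1000


traditional = [energy_kwh(30, w) for w in WATTS_PER_CYCLIST]  # 3.0 to 6.0 kWh
mxt1 = [energy_kwh(4, w) for w in WATTS_PER_CYCLIST]          # 0.4 to 0.8 kWh
reduction = 1 - 4 / 30                                        # about 0.87
```

Going from 30 cyclists to 4 works out to a reduction of roughly 87%, which is in line with the rounded 90% figure.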

On the quality side, we’ve aligned video perception with the power of language models to accomplish quite incredible results. We know from our years working closely with archivists and documentalists that working with tags is difficult. Yes, tags do improve content searchability. But they often don’t align with how the human brain processes and assesses a video.

We wanted to drastically improve the user experience when working with AI. Adding natural language text that describes and summarizes video content just like a human would was key.

MXT-1 understands, describes and summarizes video scenes. And it does so at a rate of more than 500 hours per minute.

News editors tell us that MXT-1’s scene descriptions are nearly indistinguishable from what their human media loggers are producing. This is not surprising when you consider that the very repetitive tasks of describing, transcribing and summarizing media must be extremely consistent to be effective. And unlike people, AIs don’t get tired.

Quality data can change the way you tell a story, as it brings fresh angles and insights. In this way, indexing a live or archived media collection is akin to an archaeology dig, only you’re shoveling bytes instead of dirt. I can’t wait to find out what incredible content gems our customers unearth with MXT-1.

MXT-1 is available now in beta mode. You can experience it for yourself and schedule a demo at NAB Show in Las Vegas. Book a time to meet or contact us to discuss your specific needs.
