How Data Fusion Fuels Multimodal AI’s Ability to Detect Advanced Editorial Concepts

Although it has been studied for a long time, Multimodal AI has become a major trend in recent machine learning research and industry.

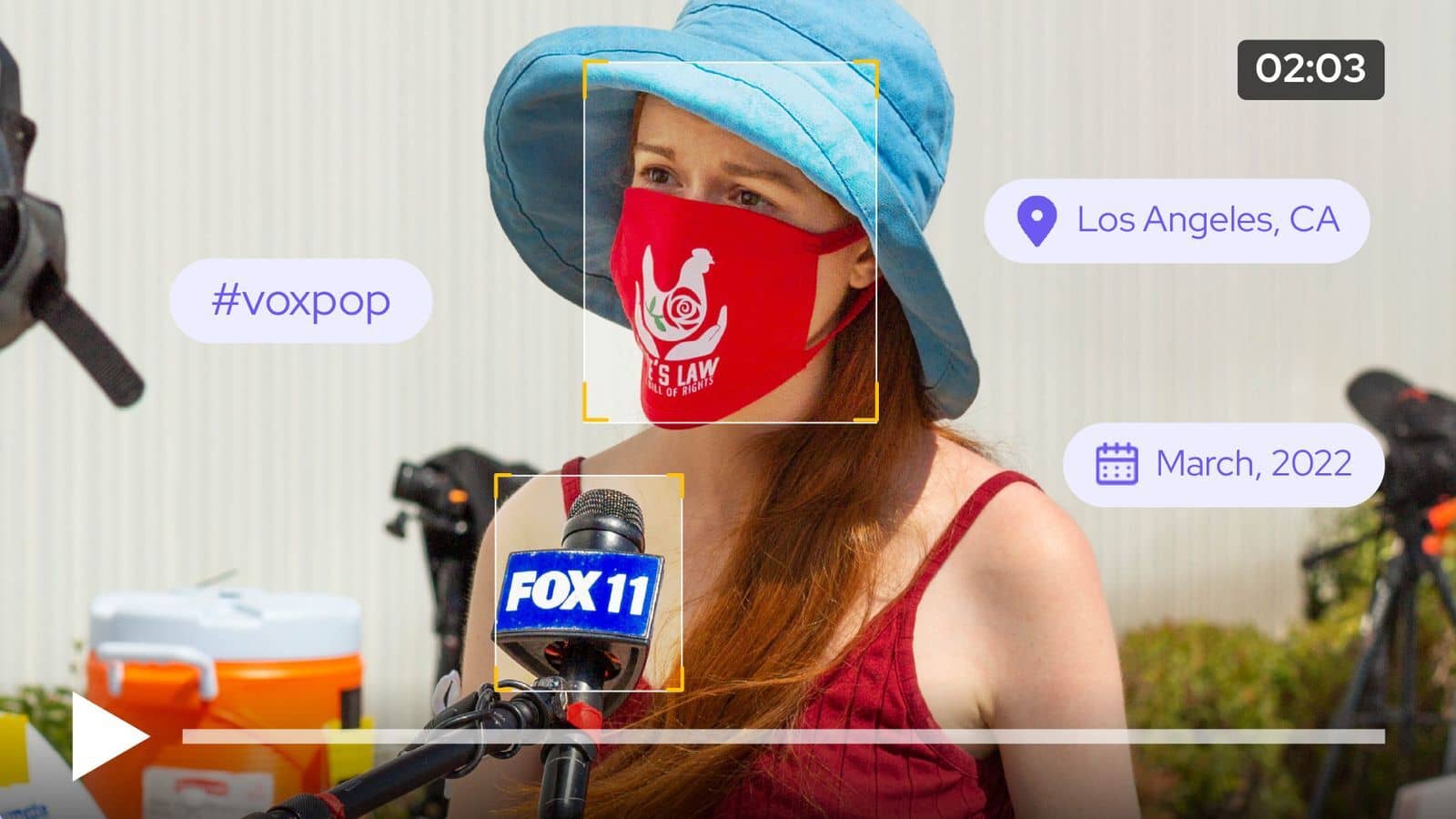

It is especially key to understanding the contents of video files, since the relevant information lies in various aspects of the recording. Sometimes you want to recognize a face, sometimes you want to understand what is said or what is written on a sign. But most of the time what you really want to understand is the context of what you’re watching.

This highly multimodal context can only be determined by multimodal AI pipelines.

In his position paper where he develops his thoughts and predictions for the future of AI, Yann LeCun recently wrote about the need to build what he calls world models. These world models can be seen as highly multimodal models that mimic the understanding of the world and the common sense that a child can build in the first years of their life.

But what really lies beneath the terms “multimodal model” or “multimodal AI”?

Building a multimodal AI that understands context means that you consider different inputs coming from various modalities. Although it can be more diverse, a modality can be seen as the equivalent of a human sense for a machine. To understand and analyze the world, human brains process data coming from their touch, sight, hearing, smell and taste. A machine can use its sensors to observe the world and gather data to feed to the multimodal pipeline. The machine then fuses these observations following a certain process to obtain a desired result.

This core process, which is the main engine of multimodal AI, is often referred to as fusion. In her book Information Fusion in Signal and Image Processing, Isabelle Bloch defines fusion as follows: “Fusion of information consists of combining information originating from several sources in order to improve decision making.”

Knowing that, one of the toughest and most crucial parts of building a multimodal AI system is choosing a fusion strategy. These strategies are very diverse, but we can classify them in two categories: Late Fusion and Mid/Early Fusion.

Mid/Early and Late fusion can be seen as different ways of crafting a tool. When you conceive a complex tool you can follow at least two different paths.

You may want to craft a tool with some parts that need to resist high temperature and some other parts that need to be rock solid. What you can do is use two different materials with the required properties, and build your tools with parts made of each material.

But in some cases, you have a second option. You could choose to fuse the materials together to make an alloy. Once you have your alloy, you can simply craft the tool out of it.

Now let’s view these materials as modalities (audio, image, text, metadata etc). In machine learning we have exactly the same crafting techniques.

- If you have multiple algorithmic pipelines that process your different modalities, you can just wait for them to deliver their results. And at the end, you define a new algorithmic layer to combine these results into a new innovative tool. This is called late fusion.

- However, if you directly mix the modalities, you can directly feed them to a neural network, for instance. The prediction obtained with the neural network can then be oriented to be your desired output. This is called mid or early fusion, and it’s like crafting a toy with an alloy.

Every multimodal AI pipeline needs its own fusion strategy, and choosing the right one can have a direct impact on performance.

When we need to tackle complex multimodal use cases here at Moments Lab, we study the different modalities available and implement the best fusion strategy to maximize our AI performances and detect complex editorial concepts.

Following this development pattern, we successfully managed to solve complex multimodal challenges, such as identifying a player's highlights in a sporting match or even pinpointing video footage of interviews that were conducted outside in city streets.

Overall, when chosen carefully, fusion strategies can make a big difference in improving the performances of multimodal AI pipelines and unlocking new use cases.

Want to know more about multimodal AI and how it can make your photo and video content searchable? Get in touch with us.

.png)